Wouldn’t it be cool if, on top of your blendshapes or any other deformations, you could have additional clusters that let you sculpt the shape you want even further?

Doing that isn’t actually that hard: just make sure the cluster is the last deformer being evaluated and you’re pretty much set. The hard part is having those deformers follow along with the already deformed mesh. I’ve been trying to find a way to do this for about a week now. I’ve tried several implementations from several books, but none of them seemed to work for me (maybe I’m just a stupid ass that should learn to read the fine print… sue me). These methods often just let the cluster follow a joint instead of the actual mesh it was deforming. Others just give you weird transformation errors or cycle errors… YUCKIE!

Anyway, here’s a new way that I worked out. It seems to work quite well, and it doesn’t take a rocket scientist to set it up (thank god, I’ve heard those guys are really expensive).

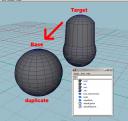

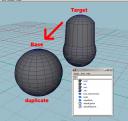

This is my test-setup, the almighty sphere!

We have 3 spheres here (I know, you only see 2, but that’s because 2 of them are lying on top of each other. With good reason!).

First of all, the “target” sphere represents any deformation you might apply to the base. Here this is just a simple blendshape going from the target to the base, but this can be anything (skinclusters, dynamics, etc…). Now let’s give a simple overview of how I’m gonna go about this.

I’m going to attach the clusters (the refinement controllers) to the base. But I’m NOT going to let these deformers themselves deform the base; I’m going to have them deform another mesh. (WHAAAA???) OK, here’s the deal: you can’t have a cluster follow the same vertices it is deforming. You’ll wind up in a cycle, since the cluster is, in essence, trying to follow itself.

The way we work around this is by duplicating the base and making a blendshape from the base to the duplicated mesh (this blendshape passes all the deformations on the base on to the duplicated mesh). By then having the clusters deform this duplicated mesh, we avoid the cycle, since these clusters exist outside of the deformation graph on the base.
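To make the cycle argument concrete, here is a minimal sketch in plain Python (no Maya required). It models each setup as a tiny "who drives whom" graph and checks it for a loop. The node names (`base_mesh`, `relay_blendshape`, and so on) are made up for illustration and are not actual Maya node names.

```python
def has_cycle(graph):
    """Return True if the directed graph {node: [downstream nodes]} contains a loop."""
    UNSEEN, IN_PROGRESS, DONE = 0, 1, 2
    state = {}

    def visit(node):
        state[node] = IN_PROGRESS
        for downstream in graph.get(node, []):
            status = state.get(downstream, UNSEEN)
            if status == IN_PROGRESS:
                return True  # we walked back into a node we are still evaluating
            if status == UNSEEN and visit(downstream):
                return True
        state[node] = DONE
        return False

    return any(state.get(n, UNSEEN) == UNSEEN and visit(n) for n in list(graph))


# Naive setup: the cluster deforms the very mesh its control is riveted to.
naive = {
    "base_mesh": ["rivet"],
    "rivet": ["control"],
    "control": ["cluster"],
    "cluster": ["base_mesh"],
}

# Workaround: the cluster deforms a duplicate that only receives the base's shape.
with_duplicate = {
    "base_mesh": ["rivet", "relay_blendshape"],
    "rivet": ["control"],
    "control": ["cluster"],
    "relay_blendshape": ["duplicate_mesh"],
    "cluster": ["duplicate_mesh"],
    "duplicate_mesh": [],
}

naive_cycles = has_cycle(naive)
duplicate_cycles = has_cycle(with_duplicate)
```

In the second graph nothing ever flows from the duplicate back to the base, which is the whole trick.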

Maybe some graphics will clear things up.

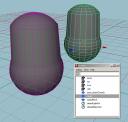

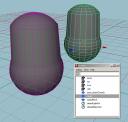

Basically, since we have a blendshape from the target to the base, activating the blendshape results in this.

Now we need something that follows the mesh. A rivet or a follicle will work wonders here. I’m gonna use the rivet, since it’s easy and doesn’t require Maya Unlimited. (For those of you who don’t know, rivet.mel is a script that creates a locator which follows all deformations on 2 edges.)

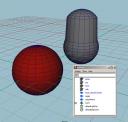

Okidoki, now we create a blendshape from the base to the duplicate of our base. This makes sure that the duplicate inherits all deformations from the base. I gave the duplicate a nice red color just for visual reference.
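If it helps to see why the duplicate ends up matching the base, here is a small plain-Python sketch of the arithmetic, assuming a blendshape is just a per-vertex linear blend toward its target. The vertex values and the `blend` helper are invented for illustration; the point is that the second blendshape uses the *deformed* base as its target and sits at weight 1.

```python
def blend(orig, target, weight):
    """Per-vertex linear blend: orig + weight * (target - orig)."""
    return [
        tuple(o + weight * (t - o) for o, t in zip(orig_vtx, target_vtx))
        for orig_vtx, target_vtx in zip(orig, target)
    ]


# Two vertices are enough to show the idea.
base_rest = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
target = [(0.0, 1.0, 0.0), (1.0, 2.0, 0.0)]

# First blendshape: target -> base, half way on.
base_deformed = blend(base_rest, target, 0.5)

# Second blendshape: (deformed) base -> duplicate, fully on.
duplicate = blend(base_rest, base_deformed, 1.0)
```

Whatever weight the first blendshape has, the duplicate lands on the same points as the deformed base, so anything riveted to the base lines up with the duplicate too.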

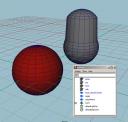

Now let’s create the refinement control. Select the verts you want deformed on the duplicate and create a cluster.

To get rid of the default “C” handle the cluster creates, create a NURBS sphere and make it the weighted node of the cluster. Many texts describe this process: it’s just a matter of connecting the .worldMatrix[0] of your control object (the NURBS sphere) to the .matrix attribute of your cluster node, and setting the “weighted node” attribute to the name of the control object.

Also, make sure the “relative” checkbox on the cluster node is checked! This makes sure that the parent’s transformations aren’t accounted for in the cluster deformation.
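Here is a rough plain-Python sketch of why that checkbox matters, using translations only. It assumes the duplicate already follows the base through the blendshape, and that the control is parented under the rivet, so the rivet's motion shows up as the control's parent transform. The function and values are hypothetical, just there to show the double transformation you get with “relative” off.

```python
def add(a, b):
    """Component-wise sum of two 3D tuples."""
    return tuple(x + y for x, y in zip(a, b))


def deform_vertex(rest_vertex, mesh_motion, control_local, relative):
    """Translation-only model of one clustered vertex on the duplicate.

    mesh_motion   -- how far the base (and therefore the rivet) has moved
    control_local -- how far the animator moved the control under the rivet
    """
    # The relay blendshape already carries the vertex along with the base.
    followed = add(rest_vertex, mesh_motion)
    if relative:
        # Only the control's own (local) movement reaches the cluster.
        cluster_delta = control_local
    else:
        # The parent's movement is included too, so the mesh motion is applied twice.
        cluster_delta = add(mesh_motion, control_local)
    return add(followed, cluster_delta)


rest = (1.0, 0.0, 0.0)
mesh_motion = (0.0, 2.0, 0.0)
control_local = (0.0, 0.0, 0.5)

with_relative = deform_vertex(rest, mesh_motion, control_local, relative=True)
without_relative = deform_vertex(rest, mesh_motion, control_local, relative=False)
```

With “relative” on, the vertex ends up at the followed position plus the animator's tweak; with it off, it overshoots by the mesh motion.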

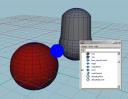

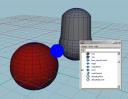

I gave my control object a nice blue color.

Make sure your cluster sits at the top of the duplicate’s deformation stack, meaning it is evaluated last, after the blendshape coming from the base.

Now just select the control object, shift-select the rivet locator and hit P to parent your control under your rivet.

Voilà! You can now animate however you like (joints, blendshapes, other deformers) and the cluster control will follow your animated mesh, making it easy and predictable to select and animate it to further hone your deformation.
